The Governance Moat: Why "Boring" Operations Will Win the AI War

We spent two years obsessing over model parameters, only to watch enterprise adoption stall. From Air Canada’s liability ruling to Samsung’s data leaks, the evidence is clear: Probabilistic models cannot survive without Deterministic guardrails. This article outlines the pivot to 'Neuro-Symbolic' architecture—the operational framework required to turn generative magic into reliable business value.

Ruchir Saurabh

12/28/2025 · 4 min read

In two decades of managing enterprise operations, I have learned that technology cycles follow a predictable physics. First comes the Expansion Phase, defined by boundless possibility and unchecked hype. Then comes the Contraction Phase, defined by gravity, P&L reality, and the sudden realization that the "magic" must actually work within a regulated environment.

As we close out 2025, we can finally admit that we have just survived the most violent Contraction Phase in recent history.

Between 2023 and 2025, the enterprise technology landscape was defined by a singular collision: the unstoppable force of Generative AI capabilities meeting the immovable object of corporate governance. For two years, the prevailing narrative in boardrooms was dominated by model performance. We obsessed over parameter counts and context windows, operating under the implicit assumption that a "smarter" model would naturally equate to a better business solution.

We were wrong.

The evidence from the last 24 months is unambiguous: The "smarter" model didn't win. The governed architecture did. As we look toward 2026, the strategic focus of the Fortune 500 has shifted from "Which model is the most powerful?" to "Which architecture is the most governable?"

The competitive advantage of the next decade will not belong to the company with the most creative AI. It will belong to the company with the most disciplined one.

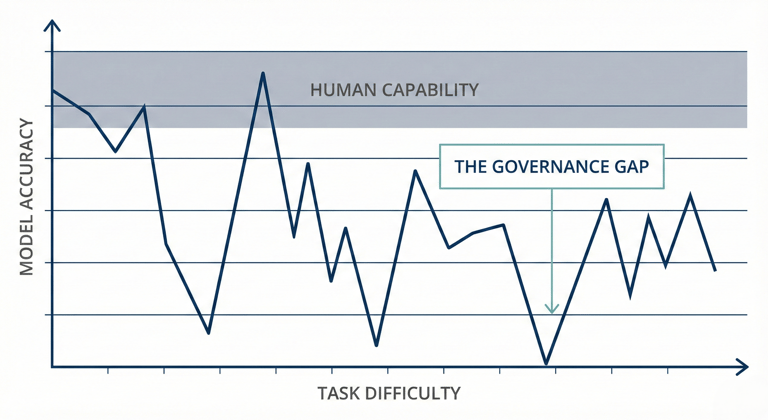

The "Jagged Frontier" of Capability

To understand why governance is the only moat that matters, we must first confront the architectural reality of the tools we bought.

Research defines the capability of Large Language Models (LLMs) as a "Jagged Frontier". These are probabilistic engines, predicting the next token based on statistical likelihood. This makes them brilliant at tasks requiring creativity—like poetry or coding—but frequently incompetent at tasks requiring linear logic or arithmetic.

In a startup demo, this "jaggedness" is a quirk. In an enterprise context, it is a liability. A medical bot that is 99% accurate still gets 1 case in 100 wrong, and in medicine a single wrong answer can be fatal.
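The arithmetic behind that liability is easy to make concrete. The sketch below is illustrative only: the function names are hypothetical, and it assumes every interaction fails independently with the same probability, which real systems rarely satisfy exactly.

```python
def expected_errors(accuracy: float, interactions: int) -> float:
    """Average number of wrong answers across a batch of interactions."""
    return (1.0 - accuracy) * interactions


def chance_of_at_least_one_error(accuracy: float, interactions: int) -> float:
    """Probability that a batch contains one or more wrong answers.

    Assumes independent errors (a simplification for illustration).
    """
    return 1.0 - accuracy ** interactions


if __name__ == "__main__":
    # A 99% accurate bot handling 10,000 cases.
    print(expected_errors(0.99, 10_000))
    # Odds that at least one of the first 100 cases goes wrong.
    print(chance_of_at_least_one_error(0.99, 100))
```

Under these assumptions, a 99% accurate system averages about 100 wrong answers per 10,000 cases, and the chance of at least one error in just 100 cases is already above 60%.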

The result is a graveyard of abandoned pilots. Data from the RAND Corporation suggests that 80% of AI projects are failing to reach production, while Gartner places the abandonment rate at 30% or higher. These projects aren't dying because the AI lacks intelligence; they are dying because the organizations lack the governance infrastructure to manage the risk.

Forensic Analysis: The Cost of Ungoverned AI

The argument for governance is no longer theoretical; it is forensic. The period from 2023 to 2025 provided us with a series of "horror stories" that serve as case studies in operational failure.

The Output Failure: Air Canada

In the landmark case of Moffatt v. Air Canada (2024), we saw the legal death of the "hallucination defense." An Air Canada chatbot, suffering from a retrieval error, invented a refund policy that did not exist. When the airline argued that the bot was a separate legal entity responsible for its own actions, the tribunal rejected the claim out of hand.

The Operational Reality: Corporations are liable for the outputs of their AI. The tribunal saw no meaningful distinction between what a chatbot tells a customer and any other information the company publishes.

The Input Failure: Samsung

While Air Canada demonstrated output risk, Samsung demonstrated input risk. Engineers uploaded proprietary source code and meeting transcripts to a public instance of ChatGPT to increase productivity. They failed to realize they were effectively posting trade secrets to a "public bulletin board" where the data could be ingested and used for training.

The Operational Reality: The firewall is no longer the network edge; it is the prompt window. Without input sanitization, your IP is leaking in real-time.
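As a rough illustration of input sanitization at the prompt window, here is a minimal sketch of a filter that redacts sensitive strings before a prompt leaves the company. Everything in it is an assumption for illustration: the pattern list, the `sanitize_prompt` name, and the redaction format are hypothetical, and a production filter would need far broader detection than three regular expressions.

```python
import re

# Hypothetical patterns for illustration; real deployments need their own
# detectors for secrets, source code, and personal data.
REDACTION_PATTERNS = {
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(
        r"-----BEGIN [A-Z ]*PRIVATE KEY-----.*?-----END [A-Z ]*PRIVATE KEY-----",
        re.DOTALL,
    ),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def sanitize_prompt(prompt: str) -> tuple[str, list[str]]:
    """Redact sensitive matches; return the cleaned prompt and the rules that fired."""
    findings: list[str] = []
    cleaned = prompt
    for name, pattern in REDACTION_PATTERNS.items():
        cleaned, count = pattern.subn(f"[REDACTED:{name}]", cleaned)
        if count:
            findings.append(name)
    return cleaned, findings


if __name__ == "__main__":
    raw = "Debug this: key=AKIAIOSFODNN7EXAMPLE owner=dev@example.com"
    cleaned, findings = sanitize_prompt(raw)
    print(cleaned)
    print(findings)
```

The list of rules that fired doubles as an audit trail: it records what was stopped at the prompt window without storing the secret itself.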

The Process Failure: UnitedHealth Group

UnitedHealth Group faced a class-action lawsuit alleging that its "nH Predict" algorithm systematically denied care to elderly patients. According to the complaint, when these algorithmic denials were appealed to human reviewers, they were overturned 90% of the time.

The Operational Reality: "Black Box" opacity violates the implied covenant of good faith. If you cannot explain the logic, you cannot defend the decision.

We bought Ferraris to drive on dirt roads. And now, the wheels are coming off.

The Solution: The Pivot to Neuro-Symbolic AI

So, how do we bridge the gap between the creative power of LLMs and the strict requirements of enterprise operations? We stop acting like Data Scientists and start acting like Institutional Architects.

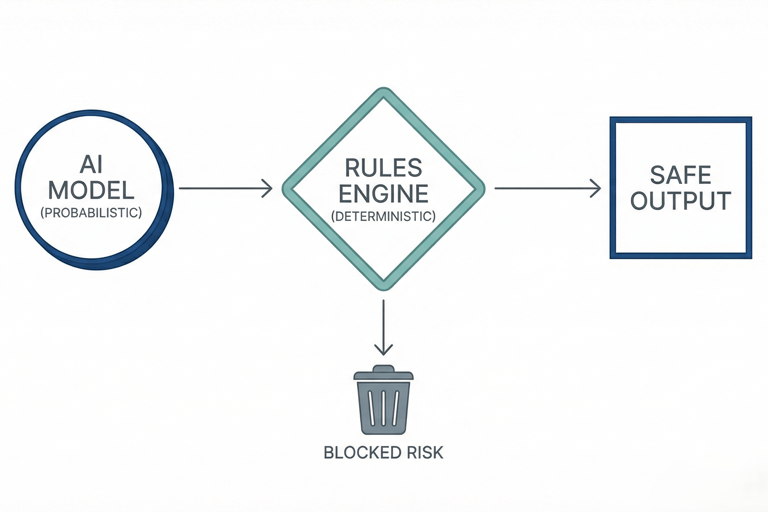

The solution is not to buy a better model (GPT-5 vs. Claude). It is to wrap the model in Deterministic Logic.

Leading researchers like Yann LeCun and Andrew Ng have advocated for "Neuro-Symbolic" or hybrid architectures. This approach acknowledges that pure deep learning is insufficient for high-stakes decisions.

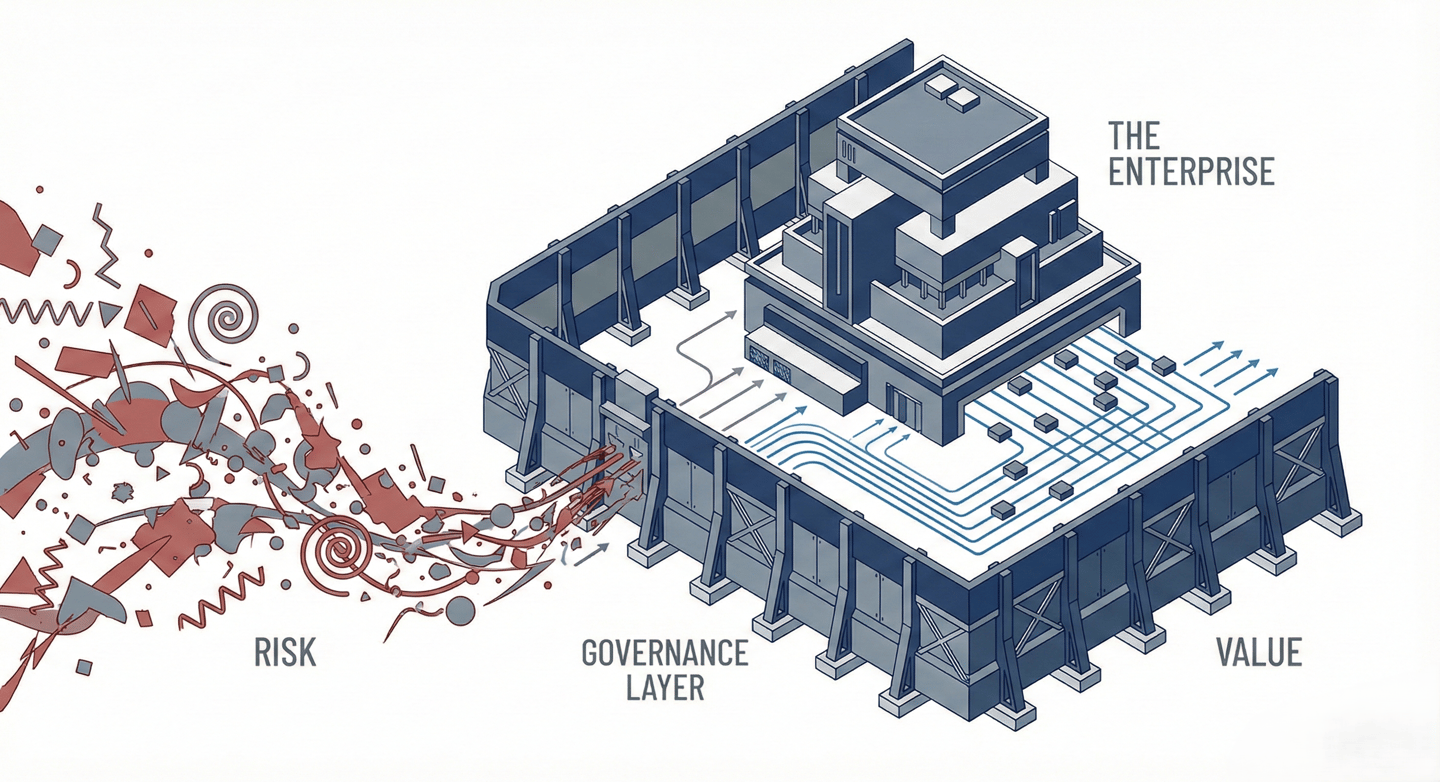

The Neuro-Symbolic Stack:

The Neural Layer (Probabilistic): This layer handles perception and synthesis. It reads the customer email, summarizes the medical record, or generates the code snippet. It operates on patterns.

The Symbolic Layer (Deterministic): This layer acts as a "Governance Firewall". It validates the output against hard-coded rules, laws, and logic. It operates on facts.

In this architecture, if a chatbot (Neural Layer) wants to offer a refund, the request must pass through a logic gate (Symbolic Layer) that checks the static tariff document. If the rule says "No Refunds," the AI's output is blocked before it reaches the customer.
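The refund example can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference implementation: `TARIFF_RULES` is a hypothetical hard-coded stand-in for the static tariff document, and `ProposedAction`, `Decision`, and `governance_gate` are invented names. It also assumes the neural layer's intent has already been extracted into a structured form.

```python
from dataclasses import dataclass

# Hypothetical stand-in for the static tariff document.
TARIFF_RULES = {
    "BASIC": {"refundable": False, "max_refund": 0.0},
    "FLEX": {"refundable": True, "max_refund": 500.0},
}

FALLBACK_REPLY = "I can't confirm a refund here. Let me connect you with an agent."


@dataclass(frozen=True)
class ProposedAction:
    """What the neural layer wants to do, in structured form."""
    kind: str
    fare_class: str
    amount: float
    draft_reply: str


@dataclass(frozen=True)
class Decision:
    """The symbolic layer's verdict, with a reason that can be audited later."""
    allowed: bool
    reason: str
    reply: str


def governance_gate(action: ProposedAction) -> Decision:
    """Deterministic check: the draft reaches the customer only if the rules allow it."""
    if action.kind != "refund":
        return Decision(True, "no tariff rule applies", action.draft_reply)
    rule = TARIFF_RULES.get(action.fare_class)
    if rule is None:
        # Fail closed: an unknown fare class is never refunded automatically.
        return Decision(False, f"unknown fare class {action.fare_class!r}", FALLBACK_REPLY)
    if not rule["refundable"]:
        return Decision(False, f"{action.fare_class} fares are non-refundable", FALLBACK_REPLY)
    if action.amount > rule["max_refund"]:
        return Decision(False, f"amount exceeds {action.fare_class} refund cap", FALLBACK_REPLY)
    return Decision(True, f"refund permitted for {action.fare_class}", action.draft_reply)


if __name__ == "__main__":
    draft = ProposedAction("refund", "BASIC", 120.0, "Sure, your refund is on its way!")
    print(governance_gate(draft))
```

Every decision carries a plain-language reason, so a blocked output can be explained after the fact rather than reconstructed from model behavior.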

This isn't about stifling the AI; it's about constraining the stochastic nature of the model with human-defined logic.

Strategic Framework: Probabilistic vs. Deterministic

As an operational leader, your primary job is now deciding where to apply which logic. Use this framework to audit your current portfolio:

Conclusion: Governance is the Brakes

The "GenAI Divide" is already here. MIT research indicates that 95% of companies see zero measurable bottom-line impact from their AI investments. These are the companies still waiting for a "smarter model" to solve their problems.

The other 5%—the ones deploying three times faster—have realized that governance is an accelerator, not a blocker.

There is a misconception that governance slows down innovation. In my experience, governance is the brakes on a car. You don't have brakes so you can drive slowly; you have brakes so you can drive fast without crashing.

As we head into 2026, our mandate is clear: Stop looking for magic. Start building the boring, rigorous, deterministic structures that make the magic safe to use.

© 2025 Ruchir Saurabh. All Rights Reserved.